Digitizing assets

Short version

While working at Birds.ai, I:

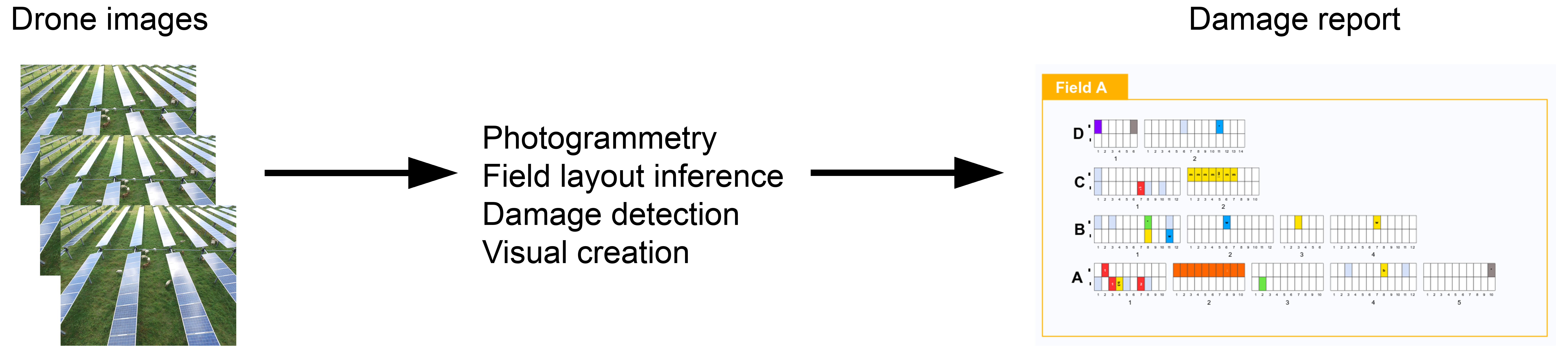

- Built a pipeline combining photogrammetry, machine learning, and classical computer vision to transform drone images into damage reports.

- Trained and deployed high-performance damage detection and segmentation models for wind turbines and solar panels.

- Migrated detection applications from Faster R-CNN to RetinaNet models and implemented U-Net for segmentation.

- Wrote software for handling large volumes of annotated data.

- Implemented prototypes for manufacturing lines.

Long version

As part of a university project, I wrote a crop detection algorithm for a company called Birds.ai. Although that solution relied on basic features and a support vector machine, the company then hired me to work with more modern computer vision techniques.

The first problem I tackled at Birds.ai was moving the detection models from Faster R-CNN to RetinaNet. RetinaNet was only a few months old at the time, but as one of the first highly accurate single-stage detectors, it promised simpler training and easier model deployment.
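
Much of what lets RetinaNet match two-stage detectors is the focal loss from its paper (Lin et al., "Focal Loss for Dense Object Detection", 2017). A single-stage detector scores tens of thousands of anchors per image, most of them easy background; the focal loss multiplies the cross-entropy by `(1 - p_t)^gamma` so those easy examples barely contribute. The sketch below computes it in plain Python for one anchor and one class. It is an illustration of the technique, not the training code used at Birds.ai; `alpha=0.25` and `gamma=2` are the defaults reported in the paper, and the function name is hypothetical.

```python
import math


def _log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def sigmoid_focal_loss(logit: float, target: int, alpha: float = 0.25, gamma: float = 2.0) -> float:
    """Binary focal loss for a single anchor/class pair (illustrative sketch).

    target is 1 for foreground, 0 for background.
    """
    # log p_t: log of the probability the model assigns to the true label.
    # Uses 1 - sigmoid(x) == sigmoid(-x) to stay stable for large logits.
    log_p_t = _log_sigmoid(logit) if target == 1 else _log_sigmoid(-logit)
    p_t = math.exp(log_p_t)
    # alpha balances foreground vs. background anchors.
    alpha_t = alpha if target == 1 else 1.0 - alpha
    # (1 - p_t)^gamma shrinks the loss of well-classified (easy) examples.
    return -alpha_t * (1.0 - p_t) ** gamma * log_p_t


easy_background = sigmoid_focal_loss(-4.0, 0)  # confidently correct background anchor
hard_background = sigmoid_focal_loss(2.0, 0)   # background anchor scored as foreground
print(easy_background, hard_background)
```

With `gamma=0` the modulating factor disappears and the function reduces to alpha-weighted cross-entropy, which is a convenient sanity check.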

After the successful model transition, I began work on a solar field damage report generator. The goal was a fully automated pipeline that transforms drone images into an annotated field overview. An essential ingredient turned out to be structure-from-motion photogrammetry for creating orthomosaics. These orthomosaics, i.e. stitches of many drone images with the perspective distortion removed, formed the basis for both the damage detection and the field layout inference. The thumbnail gif for this article is a 3D model of a solar field that was created within this pipeline. Most of my time at Birds.ai was spent on this project.

My final months at Birds.ai were spent implementing smart manufacturing line prototypes. These included a safe worker distance detector and a conveyor belt object counter.
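
A common way to build a conveyor belt object counter is to count tracked objects whose centroid crosses a virtual line across the belt. The sketch below assumes, hypothetically, that an upstream detector and tracker already yield, for every frame, a list of `(track_id, centroid_y)` pairs, and that the belt moves in the +y image direction. It is not the prototype's actual implementation; `count_line_crossings` and `line_y` are illustrative names.

```python
from typing import Dict, Iterable, List, Set, Tuple

# One frame: (track_id, centroid_y) pairs from a hypothetical detector + tracker.
Frame = List[Tuple[int, float]]


def count_line_crossings(frames: Iterable[Frame], line_y: float) -> int:
    """Count tracks whose centroid moves from above line_y to on/below it."""
    last_y: Dict[int, float] = {}  # most recent centroid per track
    counted: Set[int] = set()      # tracks already counted (count each once)
    for frame in frames:
        for track_id, y in frame:
            prev = last_y.get(track_id)
            # Only a transition across the line in the belt direction counts;
            # objects first seen past the line are ignored.
            if prev is not None and prev < line_y <= y:
                counted.add(track_id)
            last_y[track_id] = y
    return len(counted)


frames = [
    [(1, 10.0), (2, 40.0)],
    [(1, 30.0), (2, 60.0)],
    [(1, 55.0), (2, 80.0), (3, 70.0)],
]
print(count_line_crossings(frames, line_y=50.0))
```

Counting line crossings rather than detections per frame keeps each object from being counted once per frame while it sits in view; track 3 here first appears past the line and is therefore not counted.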